Image Restoration for Under-Display Camera

Abstract

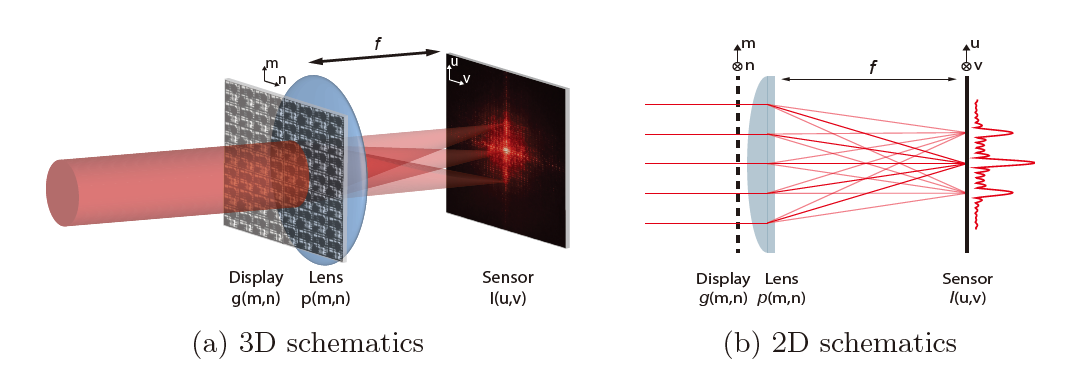

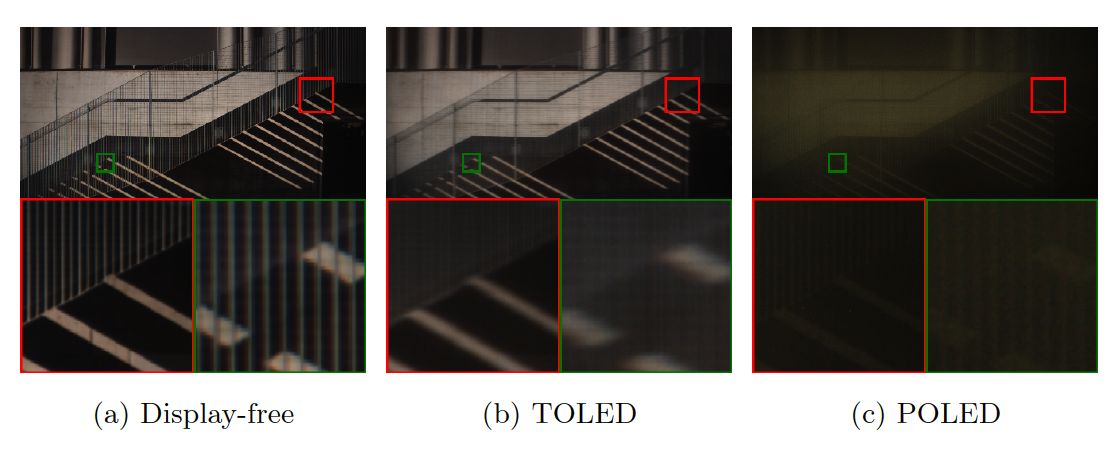

The new trend of full-screen devices encourages us to position a camera behind a screen. Removing the bezel and centralizing the camera under the screen brings a larger display-to-body ratio and enhances eye contact in video chat, but also causes image degradation. In this paper, we focus on the newly defined Under-Display Camera (UDC) as a novel real-world single-image restoration problem. First, we take a 4K Transparent OLED (T-OLED) and a phone Pentile OLED (P-OLED) and analyze their optical systems to understand the degradation. Second, we design a novel Monitor-Camera Imaging System (MCIS) for easier acquisition of real paired data, and a model-based data synthesis pipeline that generates UDC data from only the display pattern and camera measurements. Finally, we resolve the complicated degradation using learning-based methods. Our model demonstrates real-time, high-quality restoration when trained with either real or synthetic data. The presented results and methods provide good practice for applying image restoration to real-world applications.

UDC Dataset

The new dataset is collected with a Monitor-Camera Imaging System (MCIS). We used 300 images from the DIV2K dataset. For each display type (T-OLED and P-OLED), we collected paired display-free and display-covered imaging data in the form of both 16-bit RAW sensor data and 8-bit RGB. Images have a resolution of 1024*2048. We only release the paired RGB data for training. Our RGB is linear, so users can reverse the process to generate 8-bit RAW sensor data. Please refer to our report for more details of the dataset.
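As a rough illustration of what reversing the process could look like, the sketch below re-samples a linear RGB image onto a 2x2 Bayer mosaic, keeping one color channel per pixel. The function name `rgb_to_bayer` and the default `RGGB` layout are assumptions made for this example; this page does not state the sensor's actual Bayer pattern or any other processing steps, so please check the report before relying on it.

```python
import numpy as np


def rgb_to_bayer(rgb, pattern="RGGB"):
    """Re-mosaic an H x W x 3 linear RGB image into a single-channel Bayer image.

    `pattern` lists the colors of one 2x2 tile in row-major order.
    The default "RGGB" is an assumption, not taken from the dataset.
    """
    rgb = np.asarray(rgb)
    if rgb.ndim != 3 or rgb.shape[2] != 3:
        raise ValueError("expected an array of shape (H, W, 3)")
    if len(pattern) != 4 or set(pattern) - set("RGB"):
        raise ValueError("pattern must be four letters drawn from R, G, B")

    channel_index = {"R": 0, "G": 1, "B": 2}
    tile_offsets = [(0, 0), (0, 1), (1, 0), (1, 1)]  # row-major 2x2 tile

    raw = np.empty(rgb.shape[:2], dtype=rgb.dtype)
    for letter, (dy, dx) in zip(pattern, tile_offsets):
        # Keep only the channel that the sensor would record at this site.
        raw[dy::2, dx::2] = rgb[dy::2, dx::2, channel_index[letter]]
    return raw
```

The output keeps the input's dtype, so an 8-bit RGB image yields an 8-bit mosaic, matching the bit depth mentioned above.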

News

- Feb. 28, 2021: The UDC paper has been accepted to CVPR 2021. The validation and testing datasets are released for evaluation.

- Aug. 30, 2020: The UDC ECCV Challenge Report is published in ECCV Workshop Proceedings. Congratulations to all the winners.

- March 21, 2020: We organized the Image Restoration for UDC Challenge in conjunction with the ECCV'20 Workshop and Challenge on Real-World Recognition from Low-Quality Inputs (RLQ). Winners were awarded prizes. The challenge was sponsored by Microsoft.

Download

We release the same training and testing datasets that were used in the ECCV UDC challenge. The training data consists of 240 pairs of 1024*2048 images, 480 images in total. The validation inputs consist of 30 images of resolution 1024*2048, stored in a '.mat' file. The variable key is 'val_display', and the variable is a 'uint8' array of size [30, 1024, 2048, 3]. You can first extract each image and then test it with your pre-trained model. The testing dataset has the same structure as the validation data, but contains another 30 images. For a fair comparison with other methods, the model should not be trained on the validation data, and results should be reported on the testing data. We also provide the evaluation code used in the challenge.
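Extracting the individual images from the validation file could look like the minimal sketch below. It assumes the '.mat' file is in a format that `scipy.io.loadmat` can read (MATLAB v7.3 files are HDF5-based and would need a reader such as `h5py` instead). The helper names and the file name in the usage comment are hypothetical; only the key 'val_display' and the array layout come from the description above.

```python
import numpy as np


def load_stack(mat_path, key="val_display"):
    """Load the image stack stored under `key` in a '.mat' file."""
    # Imported here so split_stack() stays usable without SciPy installed.
    from scipy.io import loadmat

    return loadmat(mat_path)[key]


def split_stack(stack):
    """Split an [N, H, W, 3] array into a list of N arrays of shape [H, W, 3]."""
    stack = np.asarray(stack)
    if stack.ndim != 4 or stack.shape[-1] != 3:
        raise ValueError("expected an array of shape (N, H, W, 3)")
    return [stack[i] for i in range(stack.shape[0])]


# Usage (the file name is a placeholder, not the actual name in the archive):
# images = split_stack(load_stack("validation_inputs.mat"))
# restored = [my_model(img) for img in images]  # my_model is your own network
```

Each extracted image keeps the 'uint8' type of the stored array, so scale to floating point before inference if your model expects it.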

- UDC Training Dataset (RGB, 2.1G): [Microsoft OneDrive] [Google Drive] [Baidu Drive (pw:9k7q)]

- UDC Validation and Testing Dataset and evaluation code (RGB, 1.2G): [Microsoft OneDrive] [Google Drive] [Baidu Drive (pw:zvyc)]

Citation

Image Restoration for Under-Display Camera. Zhou, Yuqian and Ren, David and Emerton, Neil and Lim, Sehoon and Large, Timothy. The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021. arXiv Bibtex

Licence

The UDC dataset may be used for research purposes only. All the images are collected from the DIV2K dataset. The copyright belongs to Microsoft and the original dataset owners.

Contact

Should you have any questions, please contact Yuqian Zhou via zhouyuqian133@gmail.com.